DAGM GCPR | 2025

DAGM German Conference on Pattern Recognition, Freiburg

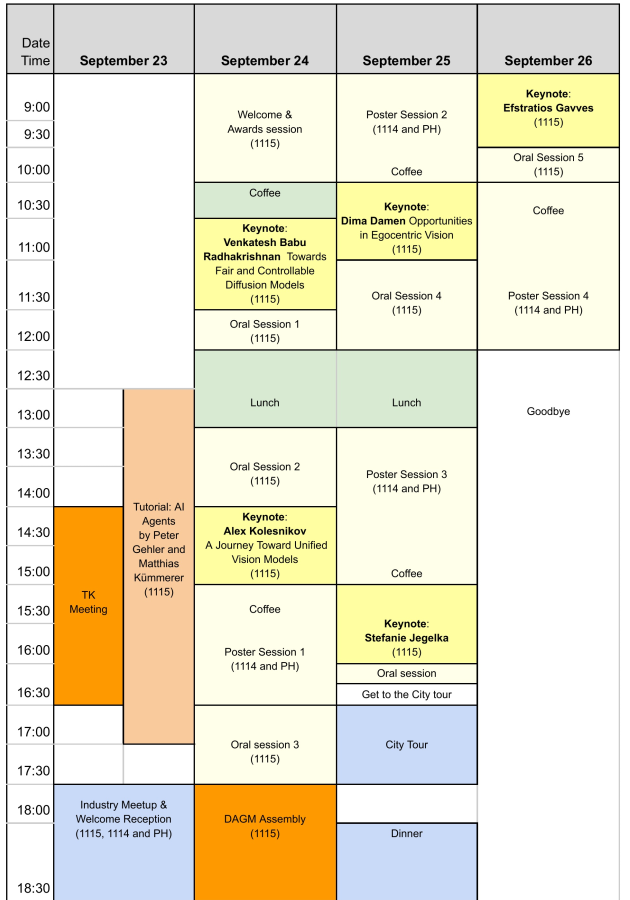

Overview

Tuesday 23.9.

13:00-17:30 Tutorial: AI Agents

Matthias Kümmerer, Linus Schneider, Jaisidh Singh, Robin Ruff, Mamen Chembakasseril, Peter Gehler

13:00-15:00 Presentation

15:00-15:30 Coffee Break

15:30-17:30 Hands-on

Always wanted to learn what AI Agents are about but never found the time? Join our half-day tutorial on LangGraph, LangChain, and the Model Context Protocol (MCP): three puzzle pieces that snap together to turn large-language-model theory into fully fledged, experiment-ready agents. We open by explaining each software package and its core concepts, with a few examples. Then we dive straight into hands-on hacking, where you will write your own AI agent or MCP host.

Bring a laptop, your favorite IDE, and an LLM key (we can provide one). If you have an idea of what you would like to code, bring that as well; otherwise, we have some examples to work on together. This tutorial is both instructional and hands-on: your chance to get started with agentic frameworks. Let’s learn together.

17:30 Welcome Reception and Industry Meet-up

The Welcome Reception is sponsored by Zeiss, and the Industry Meet-up is organized and sponsored by the Tübingen AI Center.

17:30 Entry

18:00 Impulse presentation by SPRIN-D

18:20 Company pitches

19:00 Welcome Reception with Industry posters

With participation from Carl Zeiss, MVTec, Zalando, neura-robotics, Bosch, scholar-inbox, KI macht Schule, KI Allianz BW, Deep Scenario, Viscoda, and Zebracat AI.

Along with the conference's welcome reception, we host an industry meet-up where local companies, sponsors, and academia can meet and talk. Many German vision professors, PhD and Master's students, and researchers will be present. There will be plenty of time to talk over a glass of wine, a bottle of beer, or a bottle of lemonade. We expect 150-200 people.

If you are a local company interested in joining, please contact the industry chair.

Wednesday 24.9.

9:00 Opening and Awards

9:00 Opening

9:15 Award talk

10:00 Award talk

10:30 Coffee break

11:00 Keynote: Venkatesh Babu Radhakrishnan - Towards Fair and Controllable Diffusion Models

12:00 Oral Session 1

- VisualChef: Generating Visual Aids in Cooking via Mask Inpainting
  Kuzyk, Oleh; Li, Zuoyue; Pollefeys, Marc; Wang, Xi

- Investigating Structural Pruning and Recovery Techniques for Compressing Multimodal Large Language Models: An Empirical Study
  Huang, Yiran; Thede, Lukas; Mancini, Massimiliano; Xu, Wenjia; Akata, Zeynep

12:30 Lunch break

University Cafeteria, Rempartstraße 18 (opposite the conference site)

Bring the voucher from your registration package.

13:30 Afternoon session

13:30 Oral Session 2

- Assessing Foundation Models for Mold Colony Detection with Limited Training Data
  Pichler, Henrik; Keuper, Janis; Copping, Matthew

- Out-of-Distribution Detection in LiDAR Semantic Segmentation Using Epistemic Uncertainty from Hierarchical GMMs
  Shojaei Miandashti, Hanieh; Brenner, Claus

- LADB: Latent Aligned Diffusion Bridges for Semi-Supervised Domain Translation
  Wang, Xuqin; Wu, Tao; Zhang, Yanfeng; Liu, Lu; Wang, Dong; Sun, Mingwei; Wang, Yongliang; Zeller, Niclas; Cremers, Daniel

- Activation Subspaces for Out-of-Distribution Detection
  Zöngür, Barış; Hesse, Robin; Roth, Stefan

14:30 Keynote: Alex Kolesnikov - A Journey Toward Unified Vision Models

15:30 Coffee break

15:30 Poster Session 1

17:00 Oral Session 3

- Video Object Segmentation-aware Audio Generation
  Viertola, Ilpo; Iashin, Vladimir; Rahtu, Esa

- Combining Absolute and Semi-Generalized Relative Poses for Visual Localization
  Panek, Vojtech; Sattler, Torsten; Kukelova, Zuzana

- sshELF: Single-Shot Hierarchical Extrapolation of Latent Features for 3D Reconstruction from Sparse-Views
  Najafli, Eyvaz; Kästingschäfer, Marius; Bernhard, Sebastian; Brox, Thomas; Geiger, Andreas

- VGGSounder: Audio-Visual Evaluations for Foundation Models
  Zverev, Daniil; Wiedemer, Thaddäus; Prabhu, Ameya; Bethge, Matthias; Brendel, Wieland; Koepke, A. Sophia

Thursday 25.9.

9:00 Morning session

9:00 Poster Session 2

10:00 Coffee break

10:30 Keynote: Dima Damen - Opportunities in Egocentric Vision

11:30 Oral Session 4

- MCUCoder: Adaptive Bitrate Learned Video Compression for IoT Devices
  Hojjat, Ali; Haberer, Janek; Landsiedel, Olaf

- CoProU-VO: Combining Projected Uncertainty for End-to-End Unsupervised Monocular Visual Odometry
  Xie, Jingchao; Dhaouadi, Oussema; Chen, Weirong; Meier, Johannes; Kaiser, Jacques; Cremers, Daniel

- Deep Learning-Assisted Dynamic Mode Decomposition for Non-resonant Background Removal in CARS Spectroscopy
  Chalain Valapil, Adithya Ashok; Messerschmidt, Carl; Shadaydeh, Maha; Schmitt, Michael; Popp, Jürgen; Denzler, Joachim

- Spatial Reasoning with Denoising Models
  Wewer, Christopher; Pogodzinski, Bartlomiej; Schiele, Bernt; Lenssen, Jan

12:30 Lunch break

University Cafeteria, Rempartstraße 18 (opposite the conference site)

Bring the voucher from your registration package.

13:30 Afternoon session

13:30 Poster Session 3

15:00 Coffee break

15:30 Keynote: Stefanie Jegelka - Does computational structure tell us about deep learning? Some thoughts and examples

16:30 Oral Session

- Unlocking In-Context Learning for Natural Datasets Beyond Language Modelling
  Bratulić, Jelena; Mittal, Sudhanshu; Hoffmann, David; Böhm, Samuel; Schirrmeister, Robin; Ball, Tonio; Rupprecht, Christian; Brox, Thomas

17:00 Guided City Tour

The meeting point will be at "Platz der Alten Synagoge", just next to the conference venue.

18:30 Conference Dinner

The conference dinner is sponsored by Black Forest Labs.

The dinner takes place at the Dattler Schlossbergrestaurant on the Schlossberg, with a nice view over Freiburg. You can either walk up the hill to the restaurant or take the cog railway from the Stadtgarten. See the venue page for a map. You must bring your badge.

Friday 26.9.

9:00 Morning session

9:00 Keynote: Efstratios Gavves - Cyberphysical World Models and Agents

Abstract: Artificial intelligence has moved from passive perception to active interaction, yet current systems remain limited in their ability to reason about the physical and causal structure of the world. We propose cyberphysical world models as a new paradigm that unites perception with the governing mechanisms of dynamics and causality. These models go beyond appearance-based representations by encoding the properties, interactions, and consequences that underpin real-world processes. Building on digital twins, mechanistic neural networks, and scalable causal learning, I will describe a vision, along with recent work, toward embodied agents that can predict outcomes, plan interventions, and adapt through curiosity-driven exploration. The resulting cyberphysical agents offer a pathway toward reliable, trustworthy, and autonomous systems, bridging the gap between data-driven learning and the physical world.

10:00 Oral Session 5

- Using Knowledge Graphs to harvest datasets for efficient CLIP model training
  Ging, Simon; Walter, Sebastian; Bratulić, Jelena; Dienert, Johannes; Bast, Hannah; Brox, Thomas

- Feed-Forward SceneDINO for Unsupervised Semantic Scene Completion
  Jevtić, Aleksandar; Reich, Christoph; Wimbauer, Felix; Hahn, Oliver; Rupprecht, Christian; Roth, Stefan; Cremers, Daniel

10:30 Coffee break

10:30 Poster Session 4